Editor's Note: This article was first published on the Text IQ blog. We're publishing it again, for anyone who missed it. In 2023 and beyond, legal practitioners will continue to confront their ethical and professional obligations to stay on top of evolving technology trends.

At its 2019 annual meeting, the ABA adopted Resolution No. 112 urging courts and lawyers to address the emerging ethical and legal issues relating to the usage of artificial intelligence (AI) in the practice of law, including: bias, explainability, and transparency of automated decisions made by AI; ethical and beneficial usage of AI; and controls and oversight of AI and the vendors that provide AI.

The ABA’s AI Resolution reflects the increasing anxiety over how AI will shape the legal profession, with an emphasis on the perceived risks of such technology. The concerns highlighted in the resolution mirror those raised by myriad institutions studying AI, such as the Algorithmic Justice League and the AI Now Institute, which have focused their attention on the ethical problems raised by these new technologies. But, while the risks of integrating AI into the practice of law are important to consider, the ABA’s resolution raises another equally important, though less frequently discussed, question: Could it be unethical not to use AI?

Inhuman Intelligence?

In order to investigate this question, it is helpful to have a better sense of what the anthropomorphic euphemism “artificial intelligence” is really trying to convey. The “artificial” aspect of the term suggests that we are referring to something unnatural, a product of artifice, something non-human. That is the easier definition. But defining “artificial intelligence” also requires defining “intelligence.” This is a harder task.

Clearly, intelligence cannot be reduced to the ability to perform computations accurately and quickly. If this were the case, every computer could be considered intelligent. So how do we define intelligence? Even Merriam-Webster’s dictionary offers five different definitions of the word intelligence, and there is no universally accepted definition of the term.

Given the difficulty of defining intelligence, the majority of proposed tests for artificial intelligence avoid the question altogether. Instead, these tests focus on tasks typically viewed as requiring human intelligence (such as carrying on a conversation or passing a university exam) and then assess whether the machine in question can outperform the human.

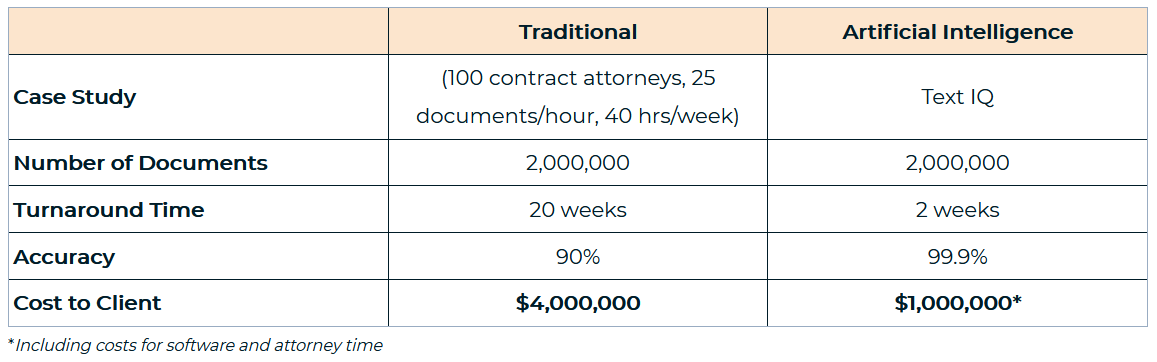

What does this mean in the context of legal practice? If we take, for example, a large antitrust matter in which one of the parties is required to review millions of documents and prevent the inadvertent production of privileged documents, what would we expect from artificial intelligence? We would likely want the machine to perform the task faster and with greater accuracy. As shown in the following table, AI delivers:

In this real-world case study, the AI-powered approach resulted in a tenfold increase in speed (even relative to 100 contract attorneys), a 10 percent reduction in risk (i.e., the AI found all the sensitive and privileged documents that the search terms and human reviewers found, as well as thousands of sensitive documents that were missed), and $3 million in cost savings to the client.

AI in the Here and Now

This type of machine-assisted lawyering is not a futuristic scenario, but increasingly part of the everyday practice of law. Even since the ABA issued its AI Resolution, the technology has evolved. For example, the ABA’s report accompanying the AI Resolution (the AI Report) describes AI used for document review as “an attorney training the computer how to categorize documents in a case” and then using “a method of predictive coding” to classify documents “after extrapolating data gathered from a sample of documents classified by the attorney.” See ABA, Report on House of Delegates Resolution No. 112 (Aug. 12–13, 2019). This is an example of what is called “supervised” machine learning.

But already, AI technology has evolved beyond this point. Unsupervised machine learning helps find previously unknown patterns in data sets without pre-existing labels. In other words, no attorney is needed to “train” the computer. The machine is able to “train itself” how to identify sensitive information that traditionally has required significant time and effort from a human or group of humans. Unsupervised machine learning (which was used in the above case study) not only removes the adoption hurdles for AI—since no “training” by the human is required—but also reduces the risk of bias because the machine can analyze the entire dataset, not just a potentially unrepresentative sample set.
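To make the idea of learning "without pre-existing labels" concrete, here is a minimal sketch in Python. It is a toy illustration only, not the method used in the case study or in any vendor's product: the sample documents, the `cluster` function, and the 0.3 similarity threshold are all assumptions invented for this example. Documents are grouped purely by how many words they share, and no one tells the program which documents belong together.

```python
def tokens(text):
    """Lowercase word set for a document (a deliberately crude representation)."""
    return set(text.lower().split())


def jaccard(a, b):
    """Overlap between two word sets: shared words divided by all words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(docs, threshold=0.3):
    """Greedy unsupervised grouping (hypothetical helper for illustration).

    Each document joins the first group whose first member is similar
    enough; otherwise it starts a new group. No labels are supplied.
    """
    groups = []  # each group is a list of document indices
    for i, doc in enumerate(docs):
        words = tokens(doc)
        for group in groups:
            if jaccard(words, tokens(docs[group[0]])) >= threshold:
                group.append(i)
                break
        else:
            groups.append([i])
    return groups


# Made-up sample documents; none carries a label such as "privileged".
docs = [
    "legal advice from counsel regarding the merger",
    "counsel legal advice regarding the merger terms",
    "lunch menu for the friday team meeting",
    "friday team meeting lunch menu update",
]

groups = cluster(docs)
print(groups)
```

In this toy run, the two documents about legal advice fall into one group and the two about the lunch menu into another, even though the program was never given a sample classified by an attorney. Production systems rely on far richer representations of text, but the contrast with the supervised approach described in the AI Report is the same.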

Defining Technological Competence

Did you know this? Should you? And, are you ethically obligated to discuss this technology with your client? Pursuant to ABA Model Rule 1.1, “competent representation requires the legal knowledge, skill, thoroughness, and preparation reasonably necessary for the representation.” The advent of the AI era of legal practice shapes this duty in at least three ways.

First, a competent lawyer must be able to understand and explain the potential role of AI in her representation. In fact, Comment 8 to Rule 1.1 elaborates that “[t]o maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology … .” Undoubtedly, AI now qualifies as one such “relevant technology.” Similarly, Comment 5 to Model Rule 1.1 explains that “[c]ompetent handling of a particular matter includes … use of methods and procedures meeting the standards of competent practitioners.” It is therefore arguably incumbent on lawyers to understand the extent of the AI technology available and, if that technology becomes standard, to make use of it.

A lawyer’s decision whether to adopt an AI solution triggers other ethical obligations as well. For example, as the ABA notes in its AI Report, a “lawyer’s failure to use AI could implicate ABA Model Rule 1.5, which requires lawyer’s fees to be reasonable. Failing to use AI technology that materially reduces the costs of providing legal services arguably could result in a lawyer charging an unreasonable fee to a client.” Similarly, ABA Model Rule 1.3 requires a lawyer to act “with reasonable diligence and promptness in representing a client.” If an AI solution could have avoided the need to seek an extension which irks the court or delays a deal, a lawyer may have to consider whether abstaining from AI runs afoul of the promptness requirement under Rule 1.3. As the ABA AI Report explains: “The bottom line is that it is essential for lawyers to be aware of how AI can be used in their practices to the extent they have not done so yet.”

But satisfying the new requirements of competent representation will require more than simply adopting new technologies as they become increasingly mainstream. The Model Rules require attorneys not only to “keep abreast” of technological advances, but also to obtain their clients’ informed consent to the use of new technologies and to supervise the use of machine assistance. Therefore, in selecting AI technologies, an attorney must consider whether she can adequately explain the process employed by that AI and its results, and whether she can continue to adequately supervise the work streams she automates.

Second, the duty of competence arguably requires more than being informed about AI solutions for day-to-day tasks as counsel. AI solutions already used by commercial firms and government organizations include programs for generating sales leads on social networking platforms, running sophisticated insurance optimization algorithms, or even locating available shelter beds. The legal profession is a relatively late adopter of AI technology. To provide competent counsel, we need to be able to assess the legal risks and potential benefits these technologies present for our clients. Comment 2 to Rule 1.1 emphasizes that “perhaps the most fundamental legal skill consists of determining what kind of legal problems a situation may involve …” The novel solutions AI provides also raise a series of novel legal issues. To provide competent representation, lawyers must have the requisite technological fluency to spot those issues.

Third, the duty to keep abreast of technology may similarly require understanding of how it is being used by our courts. The use of AI in sentencing has already come under scrutiny, as highlighted by the decision of the Wisconsin Supreme Court in State v. Loomis, 881 N.W.2d 749, 767 (Wis. 2016) (evaluating the due process implications of algorithmic risk assessment in sentencing). But this is only one possible use of the technology. AI is likely to play an increasing role in resolving discovery disputes and shows promise as an early case assessment tool, which could assist in mediation programs. To competently represent a client, a lawyer likely will need to understand the evolving role AI is playing in our courts. The extent to which the judiciary and other government adjudicative bodies will adopt this technology remains to be seen, though the ABA has included the judiciary in its call to action.

What is clear is that our understanding of “competence” must evolve as AI technology does. As the ABA AI Report explains, “AI allows lawyers to provide better, faster, and more efficient legal services to companies and organizations. The end result is that lawyers using AI are better counselors for their clients.” To realize that result, we must actively engage with the ethical questions posed by our adoption of AI, including the question of whether it is ethical not to make use of these new technologies.

Thanks to Amy Endicott, attorney at Arnold & Porter, for co-authoring this article, as well as to Kelly Clay, AGC at GlaxoSmithKline, for inspiring this article.

Artwork for this article was created by Kael Rose.